Say for example we wanted to also change the data type of the some_value column in our test table from integer NOT NULL to bigint NOT NULL. Be Careful with Syntaxīe sure to always specify NULL or NOT NULL when changing data types with ALTER COLUMN. With careful planning, the other steps required can be made as efficient as possible, for example using minimally-logged or ONLINE operations.

Being able to change the type as a metadata-only operation certainly doesn’t hurt. That is a bit outside the scope of this post (though Aaron Bertrand has written about it before). We will still need to drop and recreate any indexes on the column, recreate any referencing foreign keys, and so on. This new ability does not solve all the issues around changing the type of a column with the IDENTITY property. The previous INSERT statement (the one that threw the arithmetic overflow error) now completes successfully. Thanks to the improvements in SQL Server 2016, this command changes metadata only, and completes immediately. Say we have the following heap table using row compression (page compression would also work):ĪLTER TABLE dbo. One very handy application of this improvement is changing the data type of a column with the IDENTITY property. The signed formats can hold -128 to +127 in one byte when compressed. For example, a value of 255 can fit in one byte for tinyint, but requires two bytes in any of the signed formats. This is because it is unsigned, while the other integer types are all signed, so a metadata-only change is not possible. You may notice that tinyint is omitted from the integer types list. Without compression, row store uses the original FixedVar representation, which cannot accommodate these fixed-length data type changes without rewriting the physical layout. These changes can be metadata-only because the underlying binary data layout does not change when Column Descriptor row format is used (hence the need for compression). All of the above only for n The following string and binary type changes are supported:.smallmoney to money (uses integer representation internally).The following integer type changes are supported:.Changing from NULL to NOT NULL is not allowed.The important thing is there are no uncompressed indexes or partitions. Indexes and partitions may use a mixture of these compression levels.On all indexes and partitions, including the base heap or clustered index.The new abilities apply to row-store tables in the following circumstances: The new capabilities target a subset of the fixed-length data types. You get these undocumented improvements for free. No changes to syntax are needed, and no configuration settings need to be modified. The subject of this post is the additional changes that are enabled for metadata-only from SQL Server 2016 onward. Increasing the maximum size of a varchar, nvarchar, or varbinary column (except to max).Going from NOT NULL to NULL for the same data type.There are a number of special cases to watch out for, but as a general summary, the following actions only require changes to metadata: The operation will still require a restrictive schema modification Sch-M lock, but the metadata changes themselves will complete very quickly, regardless of the size of the table. It requires only a few changes to system tables, and a minimal amount of logging. Option 1 represents the ideal case from a performance point of view. Rewrite some or all of the stored data to match the new definition.Check all the existing data for compatibility, then change metadata.It also needs to determine if the current physical layout needs to change.ĭepending on the type of change and the configuration of the database, an ALTER COLUMN command will need to perform one of the following actions: When we ask SQL Server to change some aspect of a column’s definition, it needs to check that the existing data is compatible with the new definition. Ultimately, tables are stored as a sequence of bytes with some metadata elsewhere in the system to describe what each of those bytes mean, and how they relate to each of the table’s various columns. Along with a large number of restrictions on what is even possible with this command, there is always the question of performance. Nevertheless, there is only so much that can be done to hide the underlying complexity. It is certainly more convenient than the alternative: Creating a new table and migrating the data each time a change is necessary.

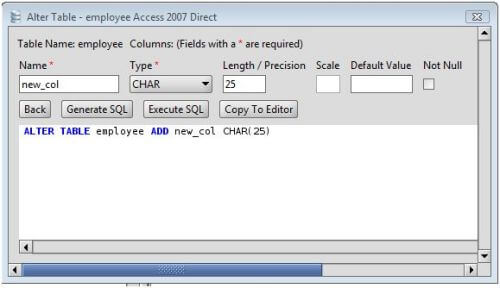

You can use it to change a column’s data type, length, precision, scale, nullability, collation…and many other things besides.

0 kommentar(er)

0 kommentar(er)